The Sparse Hausdorff Moment Problem, with Application to Topic Models

Spencer Gordon, Bijan Mazaheri, Yuval Rabani, Leonard Schulman

April, 2019

Abstract

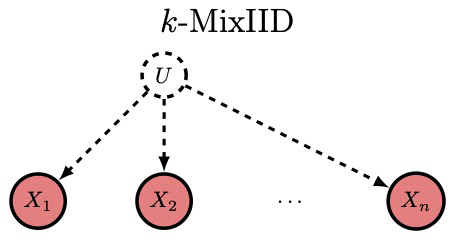

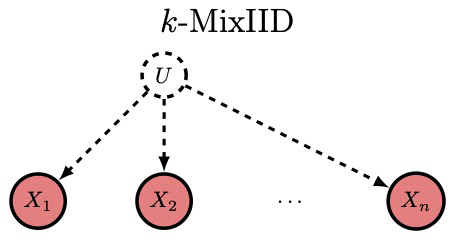

We consider the problem of identifying, from its first $m$ noisy moments, a probability distribution on $[0,1]$ with support of size $k < \infty$. This is equivalent to the problem of learning a distribution on $m$ observable binary random variables $X_1, X_2, \dots, X_m$ that are iid conditional on a hidden random variable $U$ taking values in $\{1, 2, \dots, k\}$. Our focus is on accomplishing this with $m = 2k$, which is the minimum $m$ for which verifying that the source is a $k$-mixture is possible (even with exact statistics). This problem, so simply stated, is quite useful: e.g., by a known reduction, any algorithm for it lifts to an algorithm for learning pure topic models.
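The equivalence between moments and binary observations can be illustrated with a small simulation. This is a minimal sketch, not taken from the paper: the function names, the two-component example, and the sample count are all assumptions made for illustration. It relies only on the fact that, for a mixture with weights $w_i$ and success probabilities $p_i$, the $j$-th moment $\mu_j = \sum_i w_i p_i^j$ equals the probability that the first $j$ observed bits are all 1.

```python
import random

def exact_moments(weights, probs, m):
    """Return [mu_1, ..., mu_m], where mu_j = sum_i w_i * p_i**j."""
    return [sum(w * p**j for w, p in zip(weights, probs)) for j in range(1, m + 1)]

def empirical_moments(weights, probs, m, n_samples, rng):
    """Estimate mu_j as the fraction of samples whose first j bits are all 1."""
    counts = [0] * m
    for _ in range(n_samples):
        # Draw the hidden component, then generate conditionally iid bits.
        p = rng.choices(probs, weights=weights)[0]
        for j in range(m):
            if rng.random() >= p:
                break  # this bit is 0, so no longer prefix can be all ones
            counts[j] += 1
    return [c / n_samples for c in counts]

# Hypothetical example: k = 2 components, so m = 2k = 4 moments.
weights = [0.3, 0.7]
probs = [0.2, 0.8]
m = 4
exact = exact_moments(weights, probs, m)
approx = empirical_moments(weights, probs, m, 200_000, random.Random(0))
```

Here `approx` is a noisy version of `exact`, which is the kind of input the identification problem starts from.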

We give an algorithm that identifies a $k$-mixture from samples of $m = 2k$ iid binary random variables, using a sample of size $(1/w_{\min})^2 \cdot (1/\zeta)^{O(k)}$ and a post-sampling runtime of only $O(k^{2+o(1)})$ arithmetic operations. Here $w_{\min}$ is the minimum probability of an outcome of $U$, and $\zeta$ is the minimum separation between the distinct success probabilities of the $X_i$s. Stated in terms of the moment problem, it suffices to know the moments to additive accuracy $w_{\min} \cdot \zeta^{O(k)}$. It is known that the sample complexity of any solution to the identification problem must be at least exponential in $k$. Previous results demonstrated either worse sample complexity and worse $O(k^c)$ runtime for some $c$ substantially larger than 2, or similar sample complexity and much worse $k^{O(k^2)}$ runtime.
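To make the identification task concrete, the sketch below recovers a two-component mixture from exact moments using the classical Prony (Hankel matrix) method. It is not the algorithm of this paper and makes no claim about robustness to noise. The function name `prony_two_atoms` is hypothetical, and the sketch assumes that the source has exactly two components and that the moments are known exactly. Under those assumptions it needs only $\mu_1, \mu_2, \mu_3$ together with $\mu_0 = 1$.

```python
import math

def prony_two_atoms(mu1, mu2, mu3):
    """Recover (probs, weights) of a 2-atom distribution from exact moments.

    Classical Prony method for k = 2: the atoms are the roots of
    x^2 + c1*x + c0, where (c0, c1) solve the Hankel system
    mu_{j+2} + c1*mu_{j+1} + c0*mu_j = 0 for j = 0, 1.
    """
    mu0 = 1.0  # a probability distribution has total mass 1
    det = mu0 * mu2 - mu1 * mu1
    if abs(det) < 1e-12:
        raise ValueError("singular Hankel matrix: fewer than two atoms?")
    # Cramer's rule on [[mu0, mu1], [mu1, mu2]] @ [c0, c1] = -[mu2, mu3].
    c0 = (mu1 * mu3 - mu2 * mu2) / det
    c1 = (mu1 * mu2 - mu0 * mu3) / det
    disc = c1 * c1 - 4.0 * c0
    if disc <= 0.0:
        raise ValueError("moments are not consistent with two distinct atoms")
    root = math.sqrt(disc)
    p_lo, p_hi = (-c1 - root) / 2.0, (-c1 + root) / 2.0
    # Weights from w_lo + w_hi = mu0 and w_lo*p_lo + w_hi*p_hi = mu1.
    w_lo = (p_hi * mu0 - mu1) / (p_hi - p_lo)
    return [p_lo, p_hi], [w_lo, mu0 - w_lo]

# Exact moments of the hypothetical mixture 0.3*delta(0.2) + 0.7*delta(0.8).
probs, weights = prony_two_atoms(0.62, 0.46, 0.3608)
```

With these inputs, `probs` comes out close to `[0.2, 0.8]` and `weights` close to `[0.3, 0.7]`. The division by `det` and by `p_hi - p_lo` hints at why accuracy requirements degrade as the minimum weight and the separation between success probabilities shrink.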

My interests include mixture models, high-level data fusion, and stability to distribution shift, usually through the lens of causality.