Causal Inference Despite Limited Global Confounding via Mixture Models

Spencer Gordon, Bijan Mazaheri, Yuval Rabani, Leonard Schulman

April 2023

Abstract

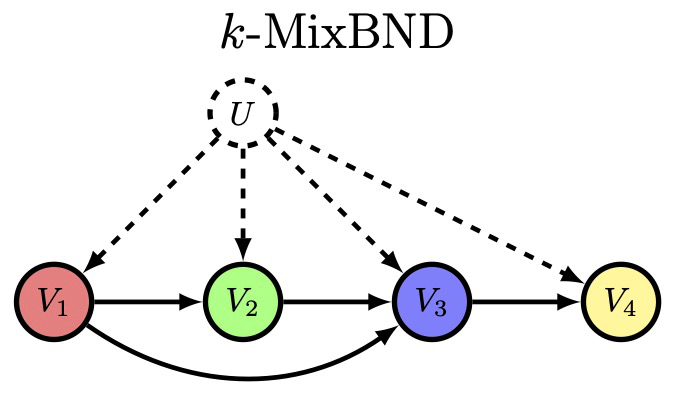

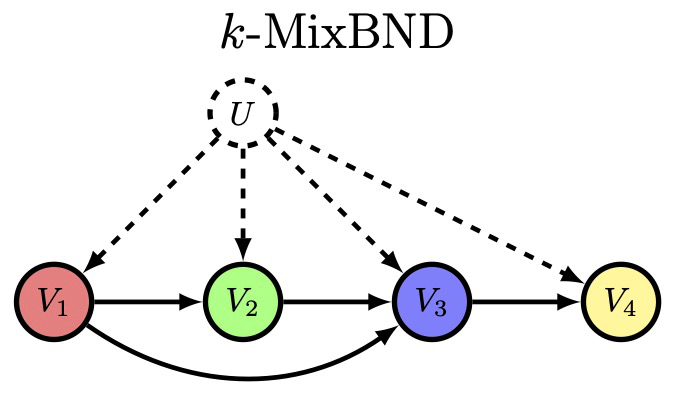

A Bayesian Network is a directed acyclic graph (DAG) on a set of random variables (the vertices); a Bayesian Network Distribution (BND) is a probability distribution on the random variables that is Markovian on the graph. A finite $k$-mixture of such models is graphically represented by a larger graph which has an additional “hidden” (or “latent”) random variable $U$, ranging in $\{1, \dots, k\}$, and a directed edge from $U$ to every other vertex. Models of this type are fundamental to causal inference, where $U$ models an unobserved confounding effect of multiple populations, obscuring the causal relationships in the observable DAG. By solving the mixture problem and recovering the joint probability distribution on $U$ and the observed variables, traditionally unidentifiable causal relationships become identifiable. Using a reduction to the more well-studied “product” case on empty graphs, we give the first algorithm to learn mixtures of non-empty DAGs.
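To make the mixture model concrete, the sketch below evaluates a small $k$-mixture of BNDs. It is a hypothetical toy example, not the authors' learning algorithm: it only shows how the observed distribution arises. Given $U = u$, each component factorizes along the observable DAG, and the observed distribution marginalizes out $U$: $P(v) = \sum_{u} P(U = u) \prod_i P(v_i \mid \mathrm{pa}(v_i), U = u)$. The DAG, the mixing weights, the probability tables, and every name in the code (`PARENTS`, `CPT`, `mixture_prob`, and so on) are assumptions chosen for illustration.

```python
from itertools import product

# Hypothetical toy model (illustration only): a k-mixture (k = 2) of Bayesian
# network distributions on three binary variables V0, V1, V2.
# Observable DAG: V0 -> V1, with V2 isolated. The hidden U points into every V_i.
# U is 0-indexed here, i.e. u in {0, 1} instead of {1, ..., k}.
K = 2
PARENTS = {0: (), 1: (0,), 2: ()}
MIX_WEIGHTS = [0.4, 0.6]  # assumed P(U = u)

# CPT[i][(u, parent_values)] = P(V_i = 1 | parents = parent_values, U = u)
CPT = {
    0: {(0, ()): 0.9, (1, ()): 0.2},
    1: {(0, (0,)): 0.3, (0, (1,)): 0.8, (1, (0,)): 0.1, (1, (1,)): 0.5},
    2: {(0, ()): 0.7, (1, ()): 0.1},
}


def component_prob(v, u):
    """P(V = v | U = u): the Markov factorization on the observable DAG."""
    p = 1.0
    for i, pa in PARENTS.items():
        p1 = CPT[i][(u, tuple(v[j] for j in pa))]
        p *= p1 if v[i] == 1 else 1.0 - p1
    return p


def mixture_prob(v):
    """P(V = v) = sum_u P(U = u) * P(V = v | U = u), with U marginalized out."""
    return sum(MIX_WEIGHTS[u] * component_prob(v, u) for u in range(K))


def marginal(event):
    """Probability that an observed assignment v satisfies the predicate `event`."""
    return sum(mixture_prob(v) for v in product((0, 1), repeat=3) if event(v))


# Within each component V0 and V2 are independent (no path joins them in the
# observable DAG), but after mixing over U they become dependent: the hidden
# variable acts as a confounder.
p0 = marginal(lambda v: v[0] == 1)
p2 = marginal(lambda v: v[2] == 1)
p02 = marginal(lambda v: v[0] == 1 and v[2] == 1)
print(f"P(V0=1)P(V2=1) = {p0 * p2:.4f}, P(V0=1, V2=1) = {p02:.4f}")
```

With these assumed tables the script prints 0.1632 for the product of marginals versus 0.2640 for the joint probability, so the observed data suggest a dependence between V0 and V2 that is entirely due to $U$. Recovering the per-component tables and mixing weights from samples of $V$ alone is the learning problem the abstract refers to; the empty-graph (“product”) case corresponds to `PARENTS` having no edges.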

Publication

In the 2nd Conference on Causal Learning and Reasoning (CLeaR 2023)

My interests include mixture models, high-level data fusion, and stability to distribution shift, usually through the lens of causality.